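A teacher at my school told me about an autonomous-driving project and recommended a competition, so I ended up entering a competition related to autonomous driving.

The details of the competition are as follows.

Introduction to the SW미래채움 (SW Mirae Chaeum) program

Global SW·AI Education Program overview

◎ Purpose of the program

The program invites professors who teach at US universities to give Korean high school students the same course that is offered to prospective university students in the US, with the aim of building software skills to a global standard.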

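| Track | Program overview | Lead instructor |
|---|---|---|
| Data Science | A data-centered introductory computer science (CS) course, taught to non-majors and prospective majors at US universities, reworked for Korean high school students | Prof. 박제호, Claremont McKenna College |
| AI Autonomous Driving | A program in which students learn computer architecture and physical computing through the Raspberry Pi, add Python-centered AI, and assemble and control a self-driving car | Prof. 이정규, Northeastern University |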

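◎ How the program is run

- Regular online classes (August to October)

- Offline hands-on training and regional preliminaries (October to November)

- Challenge finals and awards (scheduled for Thursday, November 26 to Saturday, November 28)

◎ Awards

- Minister of Science and ICT Award: 2 per track (4 in total)

- President of the National IT Industry Promotion Agency (NIPA) Award: 2 per track (4 in total)

There were two tracks, Data Science and AI Autonomous Driving. I chose AI Autonomous Driving and entered the competition together with a junior from my school club.

The detailed process is as follows.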

Course overview

🟩Course content

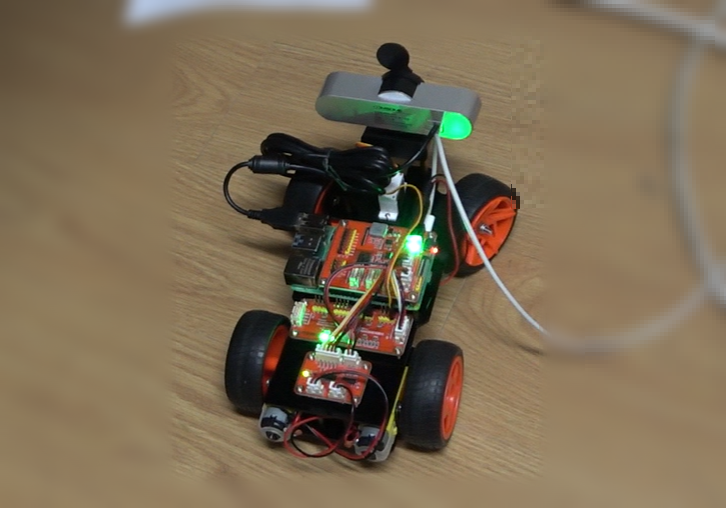

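A program in which students learn computer architecture and physical computing through the Raspberry Pi, add Python-centered AI, and assemble and control a self-driving car

🟩Program structure

(1) Educational goals

- Teach hard skills and soft skills at the same time, to raise students' interest in AI and help them choose a career direction

(2) Online classes (Saturdays, 4 hours x 4 sessions)

- Soft skills such as CS basics, Raspberry Pi, and Python programming, plus assembling the self-driving car

(3) Offline classes (up to about 8 hours; a mentor visits each team after the schedule is agreed)

- Prepare the final presentation through capstone design, advice from TA mentors, and so on

(4) Regional preliminaries

- Overall evaluation by the professor in charge (participation, understanding, initiative, presentation, etc.) and a mission (during October and November)

- The one team that passes the regional preliminary takes part in the Challenge (November 24 to 26)

🟩Instructors: Prof. 이정규 (Northeastern University CS), with teaching assistants

🟩Language: mainly English, plus Korean

🟩Main curriculum

Understanding computers (live + online): Raspberry Pi

Assembling and practicing with the self-driving car (in person): SunFounder + machine learning

AI project (online): soft skills + upgrades and final presentation

🟩Teaching materials

A self-driving car kit is provided (one per team)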

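Online class details

🟩Class hours: 10 a.m. to 2 p.m. (one session, 4 periods)

- Attendance will be checked at 10:00 sharp

- If you cannot attend the live online class, please contact the organizing office by the day before.

🟩Detailed schedule

- (Python basics) Saturday, August 27 - only for those who want to take it (replay provided)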

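In the end, the stages we had to get through were as follows.

1. Take the online lectures and learn the material

2. Submit one video log of our own and have it evaluated

3. Teams that pass stage 2 later travel to the offline venue for judging

Stage 1 was the program's own curriculum, so I will skip it and write mainly about what we did in stage 2.

Before that, here is the GitHub link where I uploaded the related files.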

https://github.com/MOSW626/hey-dobby-driver.git


Now I will explain the programming we did on our own.

1. The original program

: The program we were given looks at the floor and decides whether what it sees is a stop sign or not.

# USAGE
# python stop_detector.py

# import the necessary packages
from keras.preprocessing.image import img_to_array
from keras.models import load_model
import tensorflow as tf
from imutils.video import VideoStream
from threading import Thread
import numpy as np
import imutils
import time
import cv2
import os

# define the path to the stop / not-stop deep learning model
MODEL_PATH = "./models/stop_not_stop.model"
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'
tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

# initialize the total number of frames that *consecutively* contain
# stop sign along with threshold required to trigger the sign alarm
TOTAL_CONSEC = 0
TOTAL_THRESH = 20

# initialize whether the sign alarm has been triggered
STOP = False

# load the model
print("[INFO] loading model...")
model = load_model(MODEL_PATH)

# initialize the video stream and allow the camera sensor to warm up
print("[INFO] starting video stream...")
# vs = VideoStream(src=0).start()
# vs = VideoStream(usePiCamera=True).start()
vs = cv2.VideoCapture(-1)
vs.set(cv2.CAP_PROP_FRAME_WIDTH, 320)
vs.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)
time.sleep(2.0)

# loop over the frames from the video stream
while True:
    # grab the next frame from the camera and resize it
    # to have a maximum width of 320 pixels
    ret, frame = vs.read()
    # stop if the camera did not return a frame
    if not ret:
        break
    frame = imutils.resize(frame, width=320)

    # prepare the image to be classified by our deep learning network:
    # crop rows 60-120 and columns 240-320 (the red box drawn below)
    image = frame[60:120, 240:320]
    image = cv2.resize(image, (28, 28))
    image = image.astype("float") / 255.0
    image = img_to_array(image)
    image = np.expand_dims(image, axis=0)

    # classify the input image and initialize the label and
    # probability of the prediction
    (notStop, stop) = model.predict(image)[0]
    label = "Not Stop"
    proba = notStop

    # check to see if stop sign was detected using our convolutional
    # neural network
    if stop > notStop:
        # update the label and prediction probability
        label = "Stop"
        proba = stop

        # increment the total number of consecutive frames that
        # contain stop
        TOTAL_CONSEC += 1

        # check to see if we should raise the stop sign alarm
        if not STOP and TOTAL_CONSEC >= TOTAL_THRESH:
            # indicate that stop has been found
            STOP = True
            print("Stop Sign...")

    # otherwise, reset the total number of consecutive frames and the
    # stop sign alarm
    else:
        TOTAL_CONSEC = 0
        STOP = False

    # build the label and draw it on the frame
    label = "{}: {:.2f}%".format(label, proba * 100)
    frame = cv2.putText(frame, label, (10, 25),
        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
    frame = cv2.rectangle(frame, (240, 60), (320, 120), (0, 0, 255), 2)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

# do a bit of cleanup
print("[INFO] cleaning up...")
cv2.destroyAllWindows()
vs.release()

Our work consisted of modifying this code.
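The `TOTAL_CONSEC` / `TOTAL_THRESH` pair in the script above only raises the stop alarm once the classifier has answered "stop" for 20 frames in a row, which filters out one-off misclassifications. Below is a minimal sketch of just that counter, separated from the camera and the model so it can be tried on its own. The class name `ConsecutiveTrigger`, the threshold of 3, and the sample frame sequence are made up for illustration and are not part of the repository.

```python
class ConsecutiveTrigger:
    """Fires once after `threshold` consecutive positive frames."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.count = 0        # plays the role of TOTAL_CONSEC
        self.active = False   # plays the role of STOP

    def update(self, is_positive):
        """Feed one frame's result; return True only on the frame that fires."""
        if not is_positive:
            # any negative frame resets both the counter and the alarm
            self.count = 0
            self.active = False
            return False
        self.count += 1
        if not self.active and self.count >= self.threshold:
            self.active = True
            return True
        return False


# hypothetical per-frame classifier results (True means "stop" won)
frames = [True, True, False, True, True, True, True]
trigger = ConsecutiveTrigger(threshold=3)
fired_at = [i for i, f in enumerate(frames) if trigger.update(f)]
print(fired_at)
```

The single `False` at index 2 resets the count, so the alarm only fires after the later run of three positives, and it does not fire again while the sign stays in view.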

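2. Carrying out the project

We discussed how to proceed. Since recognizing the stop sign already worked, we set the goal of recognizing other traffic signs as well and driving the car through a course we built ourselves.

To do this, we replaced the single label/proba pair of the original code with several labels, and modified the test_network and train_network files so that the model was trained on the same set of labels.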
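Going from two outputs to several means the order of the model's outputs has to match the integer labels assigned during training. In `train_network.py` the folders are mapped as stop=1, turnleft=2, speed_30=3, speed_60=4, parking=5, uturn=6, and everything else=0, so output index 0 is the "everything else" class (called `road` in the detector) and index 6 is the U-turn sign. Here is a minimal sketch of decoding a prediction with one shared list. The names `CLASS_NAMES` and `decode`, and the probability values, are assumptions for illustration.

```python
# Index i must be the class that train_network.py encodes as integer i.
CLASS_NAMES = ["road", "stop", "turnleft", "speed_30", "speed_60",
               "parking", "uturn"]


def decode(probs):
    """Return (label, probability) for the highest-scoring class."""
    if len(probs) != len(CLASS_NAMES):
        raise ValueError("expected %d probabilities" % len(CLASS_NAMES))
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASS_NAMES[best], probs[best]


# made-up softmax output standing in for model.predict(image)[0]
example = [0.05, 0.70, 0.05, 0.05, 0.05, 0.05, 0.05]
label, proba = decode(example)
print("{}: {:.2f}%".format(label, proba * 100))
```

Keeping the names in one list avoids unpacking the outputs into separately named variables, where a swapped order silently assigns one sign's probability to another.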

The following code is the sign_detector file modified in this way.

# USAGE
# python sign_detector.py

# import the necessary packages
from keras.preprocessing.image import img_to_array
from keras.models import load_model
from imutils.video import VideoStream
from threading import Thread
import numpy as np
import imutils
import time
import cv2
import os

# define the path to the traffic-sign Keras deep learning model
MODEL_PATH = "traffic_sign.model"
#MODEL_PATH = "aa.tflite"
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

# class names in the order of the integer labels used in train_network.py
# (0 = any folder that is not one of the signs, i.e. plain road)
CLASS_NAMES = ["road", "stop", "turnleft", "speed_30", "speed_60",
    "parking", "uturn"]

# initialize the total number of frames that *consecutively* contain
# stop sign along with threshold required to trigger the sign alarm
TOTAL_CONSEC = 0
TOTAL_THRESH = 20

# initialize whether the sign alarm has been triggered
STOP = False

# load the model
print("[INFO] loading model...")
model = load_model(MODEL_PATH)

# initialize the video stream and allow the camera sensor to warm up
print("[INFO] starting video stream...")
vs = VideoStream(src=0).start()
# vs = VideoStream(usePiCamera=True).start()
time.sleep(2.0)

# loop over the frames from the video stream
while True:
    # grab the frame from the threaded video stream and resize it
    # to have a maximum width of 320 pixels
    frame = vs.read()
    frame = imutils.resize(frame, width=320)

    # prepare the image to be classified by our deep learning network
    image = frame[60:120, 240:320]
    image = cv2.resize(image, (28, 28))
    image = image.astype("float") / 255.0
    image = img_to_array(image)
    image = np.expand_dims(image, axis=0)

    # classify the input image and pick the class with the highest
    # probability as the label
    probs = model.predict(image)[0]
    idx = int(np.argmax(probs))
    label = CLASS_NAMES[idx]
    proba = probs[idx]

    # check to see if stop sign was detected using our convolutional
    # neural network
    if label == "stop":
        # increment the total number of consecutive frames that
        # contain stop
        TOTAL_CONSEC += 1

        # check to see if we should raise the stop sign alarm
        if not STOP and TOTAL_CONSEC >= TOTAL_THRESH:
            # indicate that stop has been found
            STOP = True
            print("Stop Sign...")

    # otherwise, reset the total number of consecutive frames and the
    # stop sign alarm
    else:
        TOTAL_CONSEC = 0
        STOP = False

    # build the label and draw it on the frame
    label = "{}: {:.2f}%".format(label, proba * 100)
    frame = cv2.putText(frame, label, (10, 25),
        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
    frame = cv2.rectangle(frame, (240, 60), (320, 120), (0, 0, 255), 2)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

# do a bit of cleanup
print("[INFO] cleaning up...")
cv2.destroyAllWindows()
vs.stop()

For training, we also wrote the following code, which assigns the labels.

# USAGE
# python train_network.py --dataset images --model traffic_sign.model

# set the matplotlib backend so figures can be saved in the background
import matplotlib
#matplotlib.use("Agg")

# import the necessary packages
from tensorflow import keras
from keras.preprocessing.image import ImageDataGenerator
from keras.optimizers import Adam
from sklearn.model_selection import train_test_split
from keras.preprocessing.image import img_to_array
from keras.utils import to_categorical
from lenet import LeNet
from imutils import paths
import matplotlib.pyplot as plt
import numpy as np
import argparse
import random
import cv2
import os

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-d", "--dataset", required=True,
    help="path to input dataset")
ap.add_argument("-m", "--model", required=True,
    help="path to output model")
ap.add_argument("-p", "--plot", type=str, default="plot.png",
    help="path to output loss/accuracy plot")
args = vars(ap.parse_args())

# initialize the number of epochs to train for, initial learning rate,
# and batch size
EPOCHS = 25     # number of passes over the training data
INIT_LR = 1e-3  # learning rate
BS = 32         # number of samples per training batch

# initialize the data and labels
print("[INFO] loading images...")
data = []
labels = []

# grab the image paths and randomly shuffle them
imagePaths = sorted(list(paths.list_images(args["dataset"])))
random.seed(42)
random.shuffle(imagePaths)

# loop over the input images
for imagePath in imagePaths:
    # load the image, pre-process it, and store it in the data list
    image = cv2.imread(imagePath)
    image = cv2.resize(image, (28, 28))
    image = img_to_array(image)
    data.append(image)

    # extract the class label from the image path (the name of the
    # folder holding the image) and update the labels list
    label = imagePath.split(os.path.sep)[-2]
    if label == "uturn":
        label = 6
    elif label == "parking":
        label = 5
    elif label == "speed_60":
        label = 4
    elif label == "speed_30":
        label = 3
    elif label == "turnleft":
        label = 2
    elif label == "stop":
        label = 1
    else:
        label = 0
    labels.append(label)

# scale the raw pixel intensities to the range [0, 1]
data = np.array(data, dtype="float") / 255.0
labels = np.array(labels)

# partition the data into training and testing splits using 75% of
# the data for training and the remaining 25% for testing
(trainX, testX, trainY, testY) = train_test_split(data,
    labels, test_size=0.25, random_state=42)

# convert the labels from integers to one-hot vectors
# num_classes is the number of label categories (7 here); raise it
# when adding more signs
trainY = to_categorical(trainY, num_classes=7)
testY = to_categorical(testY, num_classes=7)

# construct the image generator for data augmentation
# note: horizontal_flip mirrors images, which also mirrors
# direction-dependent signs such as turnleft
aug = ImageDataGenerator(rotation_range=30, width_shift_range=0.1,
    height_shift_range=0.1, shear_range=0.2, zoom_range=0.2,
    horizontal_flip=True, fill_mode="nearest")

# initialize the model
print("[INFO] compiling model...")
# depth and classes are the values to experiment with
model = LeNet.build(width=28, height=28, depth=3, classes=7)
opt = Adam(lr=INIT_LR, decay=INIT_LR / EPOCHS)
# 7 mutually exclusive classes with one-hot targets, so use
# categorical (not binary) cross-entropy
model.compile(loss="categorical_crossentropy", optimizer=opt,
    metrics=["accuracy"])

# train the network
print("[INFO] training network...")
H = model.fit_generator(aug.flow(trainX, trainY, batch_size=BS),
    validation_data=(testX, testY), steps_per_epoch=len(trainX) // BS,
    epochs=EPOCHS, verbose=1)

# save the model to disk
print("[INFO] serializing network...")
model.save(args["model"])

# plot the training loss and accuracy
plt.style.use("ggplot")
plt.figure()
N = EPOCHS
plt.plot(np.arange(0, N), H.history["loss"], label="train_loss")
plt.plot(np.arange(0, N), H.history["val_loss"], label="val_loss")
plt.plot(np.arange(0, N), H.history["accuracy"], label="train_acc")
plt.plot(np.arange(0, N), H.history["val_accuracy"], label="val_acc")
plt.title("Training Loss and Accuracy on Traffic sign")
plt.xlabel("Epoch #")
plt.ylabel("Loss/Accuracy")
plt.legend(loc="lower left")
#plt.savefig(args["plot"])
plt.show()

With this we programmed the car, then built a course and ran it autonomously.
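The call `to_categorical(..., num_classes=7)` in the training script turns each integer label into a 7-element one-hot vector. With one-hot targets over mutually exclusive classes, the loss normally paired with a softmax output is categorical cross-entropy. The following is a rough pure-Python sketch of both ideas without Keras; the function names and the sample probabilities are illustrative and do not come from the repository.

```python
import math


def one_hot(label, num_classes):
    """Integer label -> list with a single 1.0 at position `label`."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def categorical_cross_entropy(target, probs, eps=1e-7):
    """-sum(t * log(p)); eps guards against log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, probs))


target = one_hot(1, 7)  # label 1 is "stop" in the training script

# two made-up predictions: one confident and correct, one uniform
confident = [0.02, 0.88, 0.02, 0.02, 0.02, 0.02, 0.02]
unsure = [1.0 / 7] * 7

loss_confident = categorical_cross_entropy(target, confident)
loss_unsure = categorical_cross_entropy(target, unsure)
print(target)
print(loss_confident < loss_unsure)
```

Only the probability assigned to the true class contributes to the loss, so a uniform guess over 7 classes costs log(7) while a confident correct prediction costs much less.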

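3. Video Log

I uploaded the file we had to submit at the following link.

With that, the whole project was finished for the time being, and we waited for the evaluation.

4. Wrapping up

Unfortunately, we received a text message saying that we had not passed, and both of us on the team went on to make other plans: a trip to Japan and a trip to Singapore.

Then, out of the blue, a text arrived saying we had been accepted after all as an additional pick.

Sadly we could not take part because of those plans, but I learned a great deal and think it was time well spent.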
